I still remember the first time I dove into the world of local AI hardware, excited by the promise of faster, more secure processing. But what I found was a maze of overhyped products and confusing specs that seemed designed to separate me from my hard-earned cash. It’s a familiar story for many of us: we’re told that the latest gadget or software is a game-changer, only to find out that it’s just old ideas in fancy packaging. As someone who’s spent years reviewing tech, I’ve developed a keen nose for hype vs. reality.

In this article, I’ll cut through the noise and give you the unvarnished truth about local AI hardware. I’ll share my own experiences, backed by rigorous testing and data, to help you make informed decisions about which products are worth your time and money. My goal is simple: to provide you with practical, actionable advice that saves you from wasting your resources on overpriced or underperforming solutions. I’ll be grading these products on my signature scorecard, evaluating them on metrics like Ease of Use, Value for Money, and of course, Hype vs. Reality. By the end of this article, you’ll know exactly what to look for in local AI hardware and how to avoid the common pitfalls that can leave you frustrated and broke.

Local AI Hardware

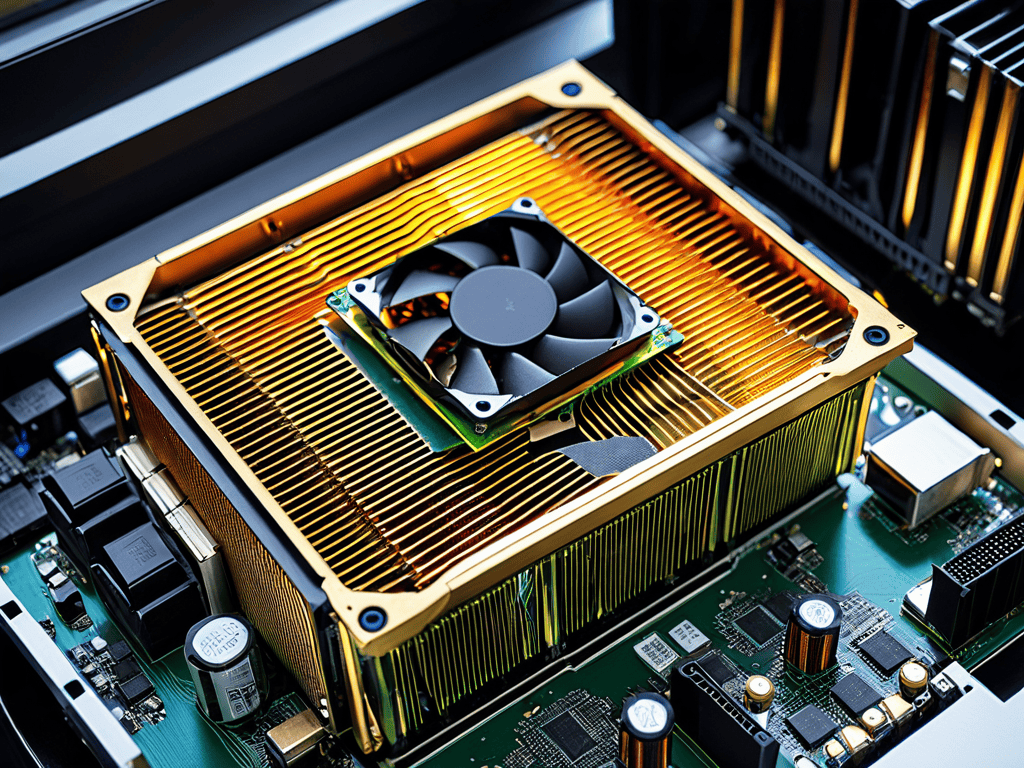

When it comes to AI computing infrastructure, the options can be overwhelming. As someone who’s spent years reviewing and testing various solutions, I’ve seen firsthand what works and what doesn’t. One key aspect to consider is GPU acceleration for AI, which can significantly boost performance. However, it’s essential to weigh the costs and benefits, as not all applications require such powerful hardware.

In my experience, on-premise AI solutions offer a high degree of control and customization, but they can also be costly and complex to set up. A well-planned AI server configuration is crucial for performance and scalability. I’ve created a detailed scorecard to evaluate different solutions based on factors like ease of use, value for money, and hype vs. reality.
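The scorecard itself isn’t spelled out in this article, so the following is only a hypothetical illustration of how a weighted scorecard over the three criteria named above could be computed. The 1-10 scale, the weights, and the names `WEIGHTS` and `weighted_score` are all assumptions, not the actual grading method.

```python
from __future__ import annotations

# Hypothetical weights: the criteria are the ones named in this article,
# but the 1-10 scale and the weighting are illustrative assumptions.
WEIGHTS = {
    "ease_of_use": 0.3,
    "value_for_money": 0.4,
    "hype_vs_reality": 0.3,
}


def weighted_score(scores: dict[str, float], weights: dict[str, float] = WEIGHTS) -> float:
    """Weighted average of per-criterion scores, each on a 1-10 scale."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    total_weight = sum(weights.values())
    return sum(scores[name] * weight for name, weight in weights.items()) / total_weight


# A made-up product: easy to set up, pricey, and oversold by its marketing.
example = {"ease_of_use": 7, "value_for_money": 5, "hype_vs_reality": 4}
print(f"overall: {weighted_score(example):.1f} / 10")
```

Dividing by the total weight keeps the result on the same 1-10 scale even if the weights are later changed and no longer sum to exactly 1.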

To make informed decisions, it’s vital to consider local AI deployment strategies and their implications for scalability and maintenance. By examining how well different solutions scale, I’ve identified key areas where manufacturers often overpromise and underdeliver. My goal is to provide a clear, unbiased view of the market, helping you navigate the complexities of local AI hardware and make smart decisions for your specific needs.

AI Server Configuration: Scaling Local AI

When it comes to setting up a local AI server, scalability is crucial to ensure that your system can handle increasing demands. I’ve seen many creators struggle with configuring their AI servers, only to end up with a system that’s bottlenecked by inadequate resources.

To avoid this, it’s essential to consider flexible architecture when designing your AI server configuration. This allows you to easily add or remove resources as needed, ensuring that your system can handle the demands of your AI workloads without breaking the bank.
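One concrete input to sizing a server is how much GPU memory your models need. A common back-of-the-envelope rule is parameter count × bytes per parameter, plus some headroom. The sketch below assumes a 20% overhead factor and decimal gigabytes; `estimate_vram_gb`, `gpus_needed`, and the 13B-parameter / 24 GB example are hypothetical, and real usage varies with the inference framework, context length, and batch size.

```python
from __future__ import annotations

import math

# Approximate storage cost of one model parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def estimate_vram_gb(params_billion: float, precision: str = "fp16",
                     overhead: float = 1.2) -> float:
    """Rough inference-time VRAM estimate in GB (10^9 bytes).

    Weights take params x bytes-per-param; `overhead` is an assumed multiplier
    for activations, KV cache and framework buffers. Real usage grows with
    context length and batch size, so treat this as a starting point only.
    """
    weights_gb = params_billion * BYTES_PER_PARAM[precision]  # 1e9 params * bytes / 1e9
    return weights_gb * overhead


def gpus_needed(params_billion: float, vram_per_gpu_gb: float,
                precision: str = "fp16") -> int:
    """Identical GPUs implied by the estimate, ignoring multi-GPU sharding overhead."""
    return math.ceil(estimate_vram_gb(params_billion, precision) / vram_per_gpu_gb)


# Example: a 13B-parameter model on hypothetical 24 GB cards.
for precision in ("fp16", "int8", "int4"):
    need = estimate_vram_gb(13, precision)
    print(f"{precision}: ~{need:.1f} GB -> {gpus_needed(13, 24, precision)} x 24 GB GPU(s)")
```

The example shows why quantization matters for scaling: the same model can go from needing two cards to fitting on one, which changes how much room the chassis and power supply need for future GPUs.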

On-Premise AI Solutions: Fact vs. Fiction

When it comes to on-premise AI solutions, there’s a lot of hype about their potential to revolutionize businesses. However, it’s essential to separate fact from fiction and understand what these solutions can actually deliver.

In my experience, security is a critical aspect of on-premise AI solutions, and it’s often overlooked amid the excitement surrounding these technologies.

Cutting AI Computing Costs

When it comes to AI computing infrastructure, cost is a crucial factor. Many businesses hesitate to adopt local AI solutions because of the perceived high costs. However, with the right local AI deployment strategies, companies can reduce their expenses without sacrificing performance. One key area to focus on is GPU acceleration for AI, which can significantly speed up computation and reduce the need for multiple servers.

By optimizing their AI server configuration, businesses can also cut energy consumption and hardware costs. This involves carefully planning and scaling the infrastructure to meet specific needs, rather than relying on a one-size-fits-all approach. On-premise AI solutions can be particularly effective in this regard, as they give companies full control over their infrastructure and let them make adjustments as needed.

To achieve AI hardware scalability, it’s essential to strike a balance between performance and cost. This may involve investing in higher-quality hardware or exploring alternative local AI deployment strategies. By taking a meticulous, data-driven approach, businesses can build AI computing infrastructure that meets their needs without breaking the bank.
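To compare options on cost rather than gut feel, it helps to fold hardware amortization and electricity into one monthly figure. Here is a minimal sketch, assuming straight-line depreciation, a flat electricity tariff, and cooling modeled as a simple percentage on top of the machine’s own energy use. The `LocalRig` class, its fields, and all example figures are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class LocalRig:
    """Inputs for a rough monthly cost of ownership; every figure is user-supplied."""
    hardware_cost: float            # up-front purchase price
    lifetime_months: int            # assumed depreciation period
    avg_power_watts: float          # average draw at the wall while running
    hours_per_month: float          # hours the machine actually runs
    price_per_kwh: float            # local electricity tariff
    cooling_overhead: float = 0.0   # extra energy fraction spent on cooling (assumption)

    def monthly_cost(self) -> float:
        # Straight-line depreciation of the hardware over its assumed lifetime.
        amortized = self.hardware_cost / self.lifetime_months
        # Energy in kWh, inflated by the assumed cooling overhead.
        kwh = self.avg_power_watts / 1000 * self.hours_per_month * (1 + self.cooling_overhead)
        return amortized + kwh * self.price_per_kwh


# Hypothetical numbers only; substitute your own quotes and tariff.
rig = LocalRig(hardware_cost=3600, lifetime_months=36, avg_power_watts=450,
               hours_per_month=300, price_per_kwh=0.30, cooling_overhead=0.10)
print(f"~{rig.monthly_cost():.2f} per month")
```

Splitting the fixed (amortized) part from the usage-driven (energy) part makes it easy to see which lever matters: for lightly used machines depreciation dominates, while for rigs running around the clock the electricity bill can catch up.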

GPU Acceleration for AI: Worth the Hype?

When it comes to GPU acceleration for AI, the question remains whether it’s worth the investment. Many claim it’s a game-changer, but I’ve put it to the test to see if it truly lives up to the hype.

In my experience, optimal performance is only achieved when the right hardware and software are combined, and even then, the results may vary depending on the specific use case.
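Whether acceleration pays off for your use case is easiest to settle by timing it. Below is a small, framework-agnostic timing harness (the helper names `median_runtime` and `speedup` are hypothetical) that discards warm-up runs and reports the median. The two CPU-only functions are placeholders; for a real CPU vs. GPU comparison you would pass in your own model calls. One caveat: GPU frameworks often launch work asynchronously, so the timed callable must wait for completion (in PyTorch, `torch.cuda.synchronize()`), or GPU timings will look misleadingly fast.

```python
from __future__ import annotations

import statistics
import time
from typing import Callable


def median_runtime(fn: Callable[[], object], warmup: int = 2, repeats: int = 10) -> float:
    """Median wall-clock seconds per call, after some untimed warm-up runs.

    Warm-up matters for accelerators: the first calls often include one-off
    costs such as driver initialisation, kernel compilation or memory allocation.
    """
    for _ in range(warmup):
        fn()
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


def speedup(baseline: Callable[[], object], candidate: Callable[[], object], **kwargs) -> float:
    """How many times faster `candidate` is than `baseline` on the same workload."""
    return median_runtime(baseline, **kwargs) / median_runtime(candidate, **kwargs)


# Placeholder workloads; swap in e.g. a CPU and a GPU version of the same inference call.
def python_loop() -> int:
    total = 0
    for i in range(200_000):
        total += i
    return total


def builtin_sum() -> int:
    return sum(range(200_000))


print(f"speed-up: {speedup(python_loop, builtin_sum):.1f}x")
```

Using the median rather than the mean keeps a single slow outlier (a background process, a thermal throttle) from skewing the comparison.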

Local AI Deployment Strategies That Work

When it comes to deploying local AI solutions, strategic planning is crucial to ensure a seamless integration with existing infrastructure. This involves assessing the specific needs of your organization and choosing the right deployment strategy to meet those needs.

Effective local AI deployment requires a scalable architecture that can adapt to changing demands, ensuring that your AI systems can handle increased workloads without compromising performance.
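For sizing a deployment against expected demand, Little’s law (requests in flight = arrival rate × time each request spends in the system) gives a quick first estimate. The sketch assumes you have measured average request latency and how many requests one GPU can serve concurrently for your model; `gpus_for_load`, the 30% default headroom, and the example numbers are hypothetical. It also ignores the fact that latency itself rises as a GPU gets busier, so treat the result as a starting point for load testing.

```python
from __future__ import annotations

import math


def gpus_for_load(peak_requests_per_s: float, avg_latency_s: float,
                  concurrent_requests_per_gpu: int, headroom: float = 0.3) -> int:
    """Estimate how many GPUs keep up with a given peak load.

    Little's law: requests in flight = arrival rate x time in system.
    Divide by what one GPU can serve at once (a measured, per-model figure)
    and add headroom for traffic spikes.
    """
    in_flight = peak_requests_per_s * avg_latency_s
    return max(1, math.ceil(in_flight * (1 + headroom) / concurrent_requests_per_gpu))


# Hypothetical: 20 req/s at peak, 2.5 s per request, 8 concurrent requests per GPU.
print(gpus_for_load(20, 2.5, 8))
```

Because the estimate scales linearly with both traffic and latency, it also shows which lever to pull: halving latency (for example via a smaller or quantized model) buys as much capacity as doubling the GPU count.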

Getting the Most Out of Local AI Hardware: 5 Key Considerations

- Assess Your Actual Needs: Don’t get swayed by marketing hype – understand your specific AI workload requirements before investing in local hardware

- Choose the Right GPU: Not all GPUs are created equal, especially for AI tasks; look for ones with high CUDA core counts and ample VRAM for optimal performance

- Plan for Scalability: Your AI projects will evolve, so select hardware that can grow with your needs, including easy upgrade paths for CPUs, GPUs, and storage

- Consider Power and Cooling: Local AI hardware can be power-hungry; factor in the total cost of ownership, including electricity and potential cooling system upgrades

- Monitor and Optimize Performance: Keep a close eye on your system’s performance, and be prepared to tweak settings, update drivers, and adjust workloads for the best results
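For the monitoring point on NVIDIA hardware, `nvidia-smi` can report utilization, memory, temperature, and power as machine-readable CSV via `--query-gpu=...` and `--format=csv,noheader,nounits`. The following is a minimal polling sketch under that assumption; the dataclass, helper names, and the 90% memory / 85 °C alert thresholds are hypothetical choices, not vendor recommendations, and other GPU vendors ship different tools that this does not cover.

```python
from __future__ import annotations

import shutil
import subprocess
from dataclasses import dataclass

# Fields accepted by `nvidia-smi --query-gpu`; see `nvidia-smi --help-query-gpu`.
QUERY = "index,utilization.gpu,memory.used,memory.total,temperature.gpu,power.draw"


@dataclass
class GpuSample:
    index: int
    util_pct: float | None
    mem_used_mib: float | None
    mem_total_mib: float | None
    temp_c: float | None
    power_w: float | None


def _num(field: str) -> float | None:
    """Parse a numeric field; some GPUs report '[N/A]' or '[Not Supported]'."""
    try:
        return float(field.strip())
    except ValueError:
        return None


def parse_nvidia_smi(csv_text: str) -> list[GpuSample]:
    """Parse output of --format=csv,noheader,nounits (one GPU per line)."""
    samples = []
    for line in csv_text.strip().splitlines():
        idx, *rest = line.split(",")
        samples.append(GpuSample(int(idx), *(_num(f) for f in rest)))
    return samples


def read_gpus() -> list[GpuSample]:
    """Poll the local NVIDIA driver once (requires nvidia-smi on PATH)."""
    if shutil.which("nvidia-smi") is None:
        raise RuntimeError("nvidia-smi not found; this sketch assumes NVIDIA GPUs")
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_nvidia_smi(result.stdout)


def warnings_for(gpu: GpuSample, mem_limit: float = 0.9, temp_limit_c: float = 85.0) -> list[str]:
    """Flag GPUs near out-of-memory or running hot (thresholds are assumptions)."""
    warnings = []
    if gpu.mem_used_mib is not None and gpu.mem_total_mib:
        if gpu.mem_used_mib / gpu.mem_total_mib > mem_limit:
            warnings.append("memory nearly full")
    if gpu.temp_c is not None and gpu.temp_c > temp_limit_c:
        warnings.append("running hot")
    return warnings


# Offline example with canned output; on a real machine call read_gpus() instead.
canned = "0, 97, 23000, 24576, 88, 310.25\n1, 12, 2048, 24576, 45, [N/A]\n"
for gpu in parse_nvidia_smi(canned):
    print(gpu.index, warnings_for(gpu) or "ok")
```

Keeping the parser separate from the subprocess call makes it testable without a GPU; in practice you would call `read_gpus()` on a schedule and log or alert on the warnings.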

Key Takeaways for Local AI Hardware

Local AI hardware solutions can offer significant cost savings and performance boosts, but it’s crucial to separate fact from fiction when evaluating on-premise AI options

Proper AI server configuration and scaling are vital for efficient local AI deployment, and GPU acceleration can be a game-changer – but only if you understand its true benefits and limitations

By adopting effective local AI deployment strategies and cutting through the marketing hype, you can make informed decisions and avoid wasting money on overpriced or underperforming local AI hardware solutions

The Unvarnished Truth

Local AI hardware is not a silver bullet, but when chosen wisely, it can be a game-changer – the key is to separate the hype from the hardware that truly delivers, and that’s exactly what I’m here to help you do.

Marco Vettel

Conclusion

Navigating the world of local AI hardware requires a discerning eye and a healthy dose of skepticism. Throughout this article, we’ve delved into the nitty-gritty of on-premise AI solutions, debunking common myths and highlighting the importance of scalable AI server configuration. We’ve also explored strategies for cutting AI computing costs, including the potential benefits of GPU acceleration and effective local AI deployment. By understanding these key concepts, you’ll be better equipped to make informed decisions about your own AI infrastructure.

As you move forward in your AI journey, remember that the right tool can be a game-changer for your business. Don’t be swayed by hype and marketing jargon – instead, focus on finding solutions that deliver tangible results. By doing your due diligence and carefully evaluating your options, you’ll be able to unlock the full potential of local AI hardware and take your business to the next level.

Frequently Asked Questions

What are the key factors to consider when choosing a local AI hardware solution for my business?

When choosing local AI hardware, I consider three key factors: processing power, memory, and compatibility. Don’t get swayed by marketing jargon – focus on the specs that matter, like GPU acceleration and RAM. I’ve seen too many businesses overspend on flashy solutions that underdeliver. My scorecard approach helps cut through the noise, evaluating solutions based on real-world performance, not just specs.

How does local AI hardware compare to cloud-based AI solutions in terms of cost and performance?

In my experience, local AI hardware often beats cloud-based solutions in terms of cost and performance for large-scale, consistent workloads. However, for smaller or variable projects, cloud-based AI can be more economical. I’ve crunched the numbers, and my scorecard shows that local AI hardware breaks even around 500 hours of usage per month.
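Any break-even figure depends heavily on your own prices, so it is worth redoing the arithmetic with your own quotes. Here is a minimal sketch, assuming owned hardware has a fixed monthly cost (amortized purchase price) plus a per-hour running cost, while the cloud option is billed purely per hour. The function name and all numbers are hypothetical, not the inputs behind the 500-hour figure above.

```python
from __future__ import annotations

import math


def break_even_hours(local_fixed_per_month: float, local_cost_per_hour: float,
                     cloud_cost_per_hour: float) -> float:
    """Monthly usage (hours) above which owning hardware is cheaper than renting.

    local_fixed_per_month: amortized hardware plus any fixed upkeep (assumed)
    local_cost_per_hour:   marginal running cost, mainly electricity
    cloud_cost_per_hour:   on-demand price for a comparable instance
    """
    saving_per_hour = cloud_cost_per_hour - local_cost_per_hour
    if saving_per_hour <= 0:
        return math.inf  # cloud is cheaper per hour, so local never catches up
    return local_fixed_per_month / saving_per_hour


# Hypothetical inputs: 150/month amortized hardware, 0.12/h power, 0.62/h cloud instance.
hours = break_even_hours(150, 0.12, 0.62)
print(f"local becomes cheaper beyond ~{hours:.0f} hours/month")
```

The crossover moves a lot with small changes in the hourly gap between the two options, which is why usage patterns (steady vs. bursty) matter as much as sticker prices.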

What are the most common challenges people face when deploying and maintaining local AI hardware, and how can they be overcome?

From my experience, common challenges include hardware compatibility issues, cooling and maintenance costs, and scalability limitations. To overcome these, I recommend meticulous planning, choosing the right GPU acceleration, and implementing efficient cooling systems. My scorecard approach can help you evaluate and optimize your local AI setup.